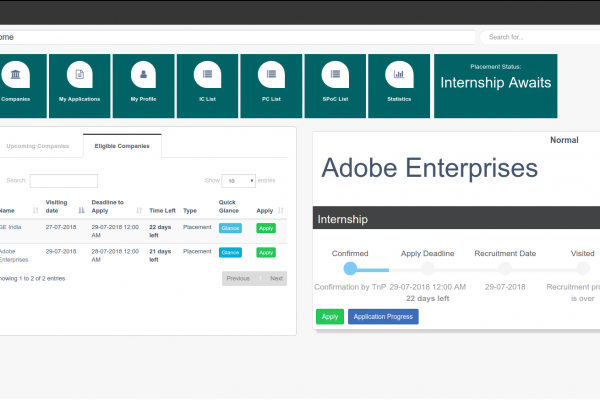

Every academic year, the hostel and mess allotment process at our institute is one of the most anticipated and crucial tasks. Traditionally, this process was conducted offline, requiring students to visit the Hostel Office in person to secure their accommodation. This meant long lines, crowded offices, and students needing to arrive on campus earlier, often causing significant inconvenience and stress to both the students and the authorities involved.

Recognizing these challenges, we transitioned to an online, first-come, first-served allotment process through the IRIS platform.

For the past few years, we have conducted the hostel allotment online. However, the earlier attempts brought challenges of their own, which we worked through with considerable effort and with support from the Council of Wardens and the Hostel Office. System issues, crashes and delays in starting the process caused frustration among students. Given the stakes involved, with students eager to secure their preferred rooms and messes, ensuring a smooth and efficient allotment process was our top priority. The IRIS platform had to handle thousands of simultaneous requests as students rushed to book their hostel rooms. This surge in traffic posed a substantial challenge, requiring our systems to perform under high load.

Our team anticipated this and took extensive measures to prepare for this situation, ensuring that our servers could manage the peak load without any hiccups. The meticulous planning and robust system setup allowed us to navigate the high-demand period smoothly, providing a seamless experience for all users involved.

A Basic Overview of our setup

We use multiple load balancers both internally and externally to manage and direct the load and prevent any single point of failure. Our application is built on Ruby on Rails, a versatile web application framework, and we utilize MariaDB, an open source relational database, to store the data. It is currently a single master system, where all the write operations are handled by the primary database server. Our services are hosted on a locally maintained Nutanix cluster which provides a robust infrastructure capable of supporting our current needs.

Here’s a behind-the-scenes look at how we prepared for and executed this essential process using our existing infrastructure:

Rigorous Load Testing for Seamless Performance

What is load testing?

Load testing is the process of testing applications by simulating and monitoring an estimated system load. It is an effective way to find bottlenecks or any shortcomings that could be present in the system or the application.

Like any application, IRIS had run into performance issues under heavy load in previous years. To prevent a repeat this time, we put the hostel allotment endpoints through a thorough load testing procedure over a few weeks. This let us find and fix problems in advance, so that we could serve our end users with a reliable application that behaves as expected under high load.

Implementation

To conduct the load testing, we used k6 from Grafana Labs.

Here’s a brief overview of our setup and tools:

- k6: Our primary load testing tool.

- Prometheus and InfluxDB: These were used to carry data and logs from the k6 containers to the Grafana dashboard.

- Node-Exporter: Utilized to monitor the vitals of the load generator, ensuring no bottlenecks from the load generator, especially on the network front.

Why k6?

We chose k6 as our load testing tool for several reasons. It let us get started quickly, so we could focus on the main test logic rather than on setting up the tool. It also has out-of-the-box support for stages and parallel connections, both of which we used extensively throughout our testing. Moreover, k6 scripts are written in JavaScript, which is widely used and therefore easy to work with.
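To give a sense of what stages look like, here is a minimal sketch of a ramp-up, hold and ramp-down profile in the shape k6 expects for its `stages` option. The helper name `buildStages` and all the numbers are our own illustrative assumptions, not the values used in the actual tests.

```javascript
// Build a k6-style `stages` array: ramp up, hold at peak, ramp down.
// The helper and its numbers are illustrative, not the real test values.
function buildStages(peakUsers, rampSeconds, holdSeconds) {
  return [
    { duration: `${rampSeconds}s`, target: peakUsers }, // ramp up to the peak
    { duration: `${holdSeconds}s`, target: peakUsers }, // hold the peak load
    { duration: `${rampSeconds}s`, target: 0 },         // ramp back down
  ];
}

// In a k6 script, an object like this would be exported as `options`.
const options = { stages: buildStages(1500, 60, 300) };
console.log(JSON.stringify(options, null, 2));
```

Each stage tells k6 how many virtual users to reach and how long to take getting there, so the initial rush and the sustained load can be modelled separately.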

A brief overview of the test

Our scripts focused mainly on the hostel allotment endpoints. We set up a number of test users solely for testing, and created a test hostel whose rooms we used to test the user load. Docker containers were set up so that the tests could be replicated easily. We also had some scripts for frontend tests that would estimate the load not only on the allotment endpoints but also on the endpoints from which the assets are served.

Each script chose a random user and a random room from the lists we had created, and then tried to allot that user to a room in the test hostel. The script first authenticated the user, then used that user’s credentials to access the hostel allotment endpoint, and finally sent a request to allot the user to the chosen room. We also simulated retries to make the test as close to real life as possible.
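The flow described above can be sketched roughly as follows. This is plain JavaScript rather than our actual k6 script: `client.login` and `client.allot` are hypothetical stand-ins for the HTTP calls, the in-memory fake client exists only so the sketch runs on its own, and picking a fresh random room on each retry is an assumption.

```javascript
// Pick a random element from a non-empty array.
function pickRandom(items, random = Math.random) {
  return items[Math.floor(random() * items.length)];
}

// One simulated student: log in, then try to book a room, retrying on failure.
// `client.login` and `client.allot` are hypothetical stand-ins for HTTP calls.
function runIteration(client, users, rooms, maxAttempts = 3, random = Math.random) {
  const user = pickRandom(users, random);
  const session = client.login(user);
  if (!session) {
    return { user, loggedIn: false, allotted: false, attempts: 0 };
  }
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const room = pickRandom(rooms, random); // assumption: a retry may pick another room
    if (client.allot(session, room)) {
      return { user, room, loggedIn: true, allotted: true, attempts: attempt };
    }
  }
  return { user, loggedIn: true, allotted: false, attempts: maxAttempts };
}

// In-memory fake that rejects a room once it is taken, so the sketch runs standalone.
function makeFakeClient() {
  const taken = new Set();
  return {
    login: (user) => ({ token: `token-for-${user}` }),
    allot: (session, room) => {
      if (taken.has(room)) return false;
      taken.add(room);
      return true;
    },
  };
}

const client = makeFakeClient();
const result = runIteration(client, ['student1', 'student2'], ['A-101', 'A-102']);
console.log(result);
```

The `loggedIn` and `allotted` flags in the returned object correspond to the kind of pass/fail checks a load testing tool can record for every iteration.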

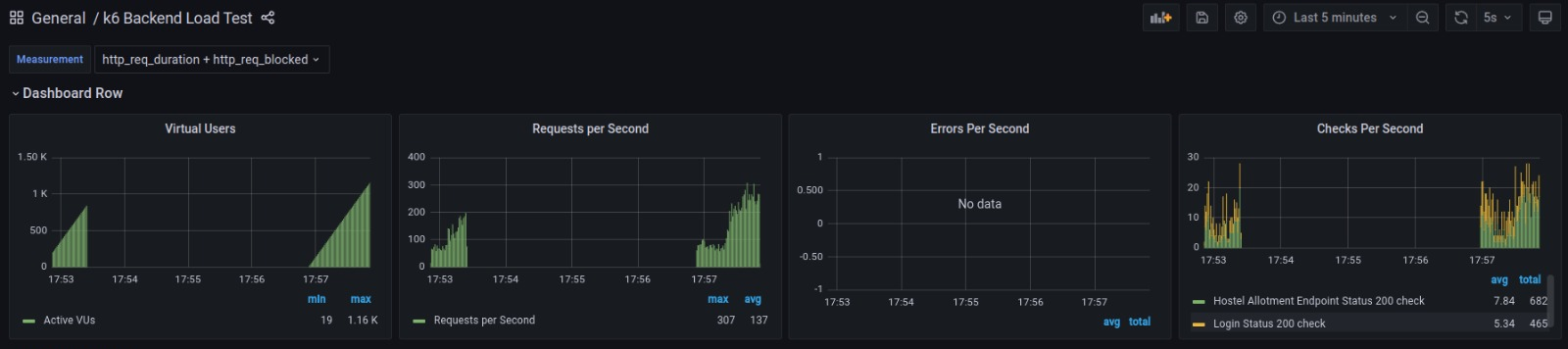

Here’s a quick summary of a few stats from one of our earlier tests:

The Grafana dashboard template we use shows vital information such as virtual users, request rate, errors and checks. In this particular test, at the point shown above, we had simulated over 1.36k users, all connecting to our application in parallel. We had checks in place to verify that each user had logged in and had been allotted a room.

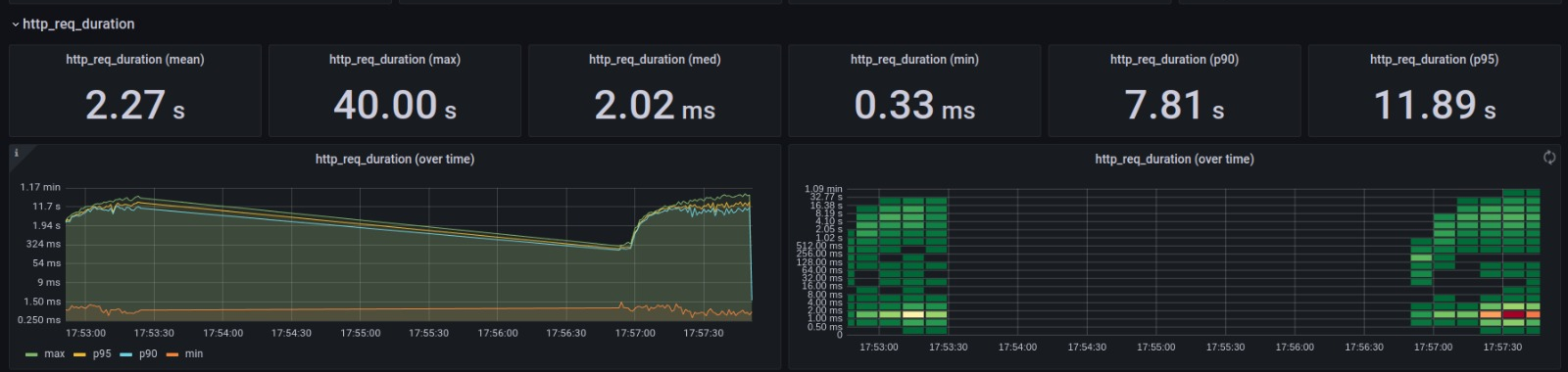

This gives us some insight into the HTTP requests sent during the tests. The graphs show, among other things, the min, max and p90 request durations over the course of the test.
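For readers unfamiliar with p90, it is the duration below which 90% of the requests completed. The sketch below computes min, max and p90 from a list of durations using the simple nearest-rank method. The function name and sample values are illustrative, and k6 or Grafana may interpolate percentiles slightly differently.

```javascript
// Summarize request durations (in ms) with min, max and a nearest-rank p90.
// Nearest-rank is one common percentile definition; tools may interpolate instead.
function summarize(durationsMs) {
  if (durationsMs.length === 0) {
    throw new Error('no samples to summarize');
  }
  const sorted = [...durationsMs].sort((a, b) => a - b); // numeric sort on a copy
  const rank = Math.ceil((90 * sorted.length) / 100);    // 1-based rank of the p90 sample
  return {
    min: sorted[0],
    max: sorted[sorted.length - 1],
    p90: sorted[rank - 1],
  };
}

// Illustrative sample values, not measurements from our tests.
const sample = [120, 95, 310, 150, 101, 99, 480, 130, 115, 140];
console.log(summarize(sample));
```

A p90 that sits well below the max suggests that slow requests were outliers rather than the norm, which is why we looked at both.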

We measured all traffic in the application, resource utilization and throughput rates to identify the application’s breaking point and any overlooked shortcomings. We were comfortably able to handle over 2.5k users accessing our application simultaneously. Every error, crash or slowdown during testing was observed and investigated in depth. We made the necessary adjustments to the configuration of the application and the services it depends on, to remove the bottlenecks we found and to make sure the application performed as expected.

We also ran frontend tests for the hostel allotments and addressed issues with asset fetching during these tests. Although they weren’t as successful as the backend tests, they now serve as a good foundation for improving our frontend testing. With further development, we would be able to test our application under conditions much closer to real life.

Future Enhancements and Testing Strategies

Going forward, we may implement testing for all major modules, and maybe even integrate some basic tests into the CI/CD pipeline of the modules we work on. This would help us catch issues and bugs that may have been overlooked during development, and provide a smoother experience for our end users.

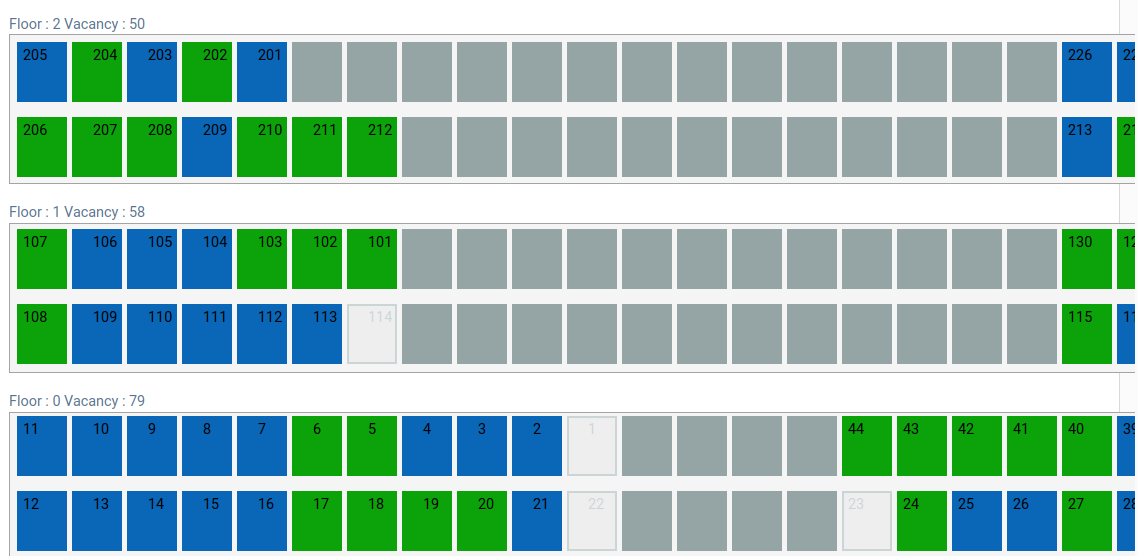

Strategic Slot-Based Allotment for Efficient Management

The results of the load testing helped us understand the theoretical maximum load our system could handle. We realized that handling allotments for 5000+ students at once might not be the best approach. We therefore devised a slot-based system in which different batches had staggered start times. This was crucial because the initial surge when the allotment opened caused the highest load on our servers. By having different start times, we ensured that no single moment would overwhelm the system.

We worked closely with the Council of Wardens to determine the best slots according to their plan, ensuring fairness and efficiency. Each batch had specific rooms reserved for their slot, so no one’s chances of securing a preferred room were compromised by the staggered timings. This collaborative planning ensured a balanced distribution of the load and a smooth allotment process for everyone involved.
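The idea of staggered slots with reserved rooms can be sketched as below. This is not the IRIS implementation, which is written in Ruby on Rails. The batch names, room numbers, opening time and 30-minute gap are all hypothetical.

```javascript
// Give each batch its own opening time and its own reserved rooms.
// Batch names, room numbers and the gap between slots are illustrative assumptions.
function buildSlots(batches, firstOpenMs, gapMinutes) {
  return batches.map((batch, index) => ({
    name: batch.name,
    rooms: new Set(batch.rooms),
    opensAt: firstOpenMs + index * gapMinutes * 60 * 1000,
  }));
}

// A booking is accepted only if the batch's slot has opened
// and the room belongs to that batch's reserved set.
function canBook(slots, batchName, room, nowMs) {
  const slot = slots.find((s) => s.name === batchName);
  if (!slot) return false;
  return nowMs >= slot.opensAt && slot.rooms.has(room);
}

const firstOpen = Date.UTC(2024, 0, 1, 10, 0); // hypothetical opening time
const slots = buildSlots(
  [
    { name: 'batch-a', rooms: ['A-101', 'A-102'] },
    { name: 'batch-b', rooms: ['B-201', 'B-202'] },
  ],
  firstOpen,
  30
);

for (const slot of slots) {
  console.log(slot.name, 'opens at', new Date(slot.opensAt).toISOString());
}
```

Because each batch competes only for its own reserved rooms, opening later does not reduce anyone's chances, while the opening-time surge is split across several smaller peaks.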

Addressing Issues in Real Time and Contingency Plan

While we had meticulously prepared for a smooth process, unforeseen issues can always arise. To manage such scenarios, we put a robust contingency plan in place and monitored the system actively.

In the unlikely event of significant problems during the allotment process, our primary contingency measure was to remove the IRIS record from the Load Balancer (LB) and restore the database from the most recent backup taken just before the allotment began. This strategy would allow us to quickly mitigate disruptions and resume other normal operations with minimal delay.

Following the restoration, we could immediately begin debugging both the application and the database to identify and resolve any issues or queries causing blockages. Throughout the allotment process, our team closely monitored the system. We used Sentry for real-time server error tracking and Nutanix to monitor our virtual machines’ real-time statistics, so that any unforeseen problem could be identified and resolved promptly, maintaining the integrity of the process.

We had over 2.38 lakh (238,000) hits on the hostels controllers alone over the duration of the allotments.

Here’s a brief of the stats:

- CPU and Memory: The peak load on the processor was 95% while the memory usage of the server never went above 72.5%.

- Controller IOPS: The number of input/output operations, i.e., read or write operations, that happen per second. We clocked a peak of 69 IOPS.

- Controller Avg IO Latency: The time between an IO request being made and its response being received. We had a peak average latency of about 18ms.

- CPU Ready Time: The amount of time a VM spends waiting for the hypervisor to schedule it on a physical processor when it is ready to process a task. It is shown either in milliseconds or as a percentage. The CPU ready percentage is that waiting time expressed as a percentage of the measurement interval, and a lower value is better for an application. We had a peak CPU Ready percentage of 0.72%, which means our VMs spent very little time waiting for a processor.
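To relate the two ways CPU ready time is reported, here is a small sketch that converts a ready time in milliseconds into a percentage of a sampling interval. The function name, the 20-second interval and the 144 ms figure are assumptions chosen for illustration. The exact interval and formula depend on the hypervisor and monitoring tool.

```javascript
// Convert CPU ready time (ms) into a percentage of the sampling interval.
// The interval length is an assumption; monitoring tools use different sampling windows.
function cpuReadyPercent(readyMs, intervalSeconds) {
  if (intervalSeconds <= 0) {
    throw new Error('interval must be positive');
  }
  return (readyMs / (intervalSeconds * 1000)) * 100;
}

// Illustrative input: 144 ms of waiting within a 20-second interval.
const percent = cpuReadyPercent(144, 20);
console.log(`${percent.toFixed(2)}% CPU ready`);
```

Under these assumed numbers, a VM that waited 144 ms in a 20-second window would report a figure in the same range as the peak we observed.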

The peaks correspond to the moment when the hostel allotments were enabled, as we saw a huge influx of users at once. As evident from the stats, there were no abnormalities during the allotment period, and everything remained under control.

Conclusion

The successful execution of this year’s hostel allotment process was a testament to our meticulous planning and the collaborative effort of all involved. Testing the application beforehand helped us fix issues that might otherwise have gone unnoticed or, worse, surfaced only when the application was under such high load. This proactive approach enabled us to handle high traffic efficiently, ensuring a smooth experience for all.

We would like to extend our heartfelt thanks to Dr. Pushparaj Shetty D (Prof-in-charge, Hostels), Hostel Office, all the Hostel Block Wardens, and Quality and Maintenance Wardens for their invaluable support and guidance throughout this process. Their cooperation in sharing the allotment plans on time, timely communication for any changes, and most importantly, putting faith in us, a student-led team, to carry out this task were crucial to our success.

Special thanks to our dedicated team members K Vinit Puranik, Devaansh Kumar, Utkarsh Mahajan and our alumnus mentor Sushanth Sathesh Rao for their exceptional efforts in conducting rigorous load testing and ensuring the platform’s readiness, and Vedant Tarale, Fahim Ahmed and Dhruva N for their crucial roles in communicating requirements, setting up the module configurations, monitoring, and managing issues throughout the process.

We look forward to continued improvements and innovations at IRIS, always striving to enhance the experience for everyone involved.

It was a tense moment for me and the other hostel wardens when the room booking module was opened. I was relaxed to hear that it went very smoothly. The meticulous planning by the team and learning from past experience have made all the difference. IRIS team, NITK has done a commendable job and deserves utmost appreciation. Thanks to Dr Mandeep Singh, my colleague, for coordinating with the team.